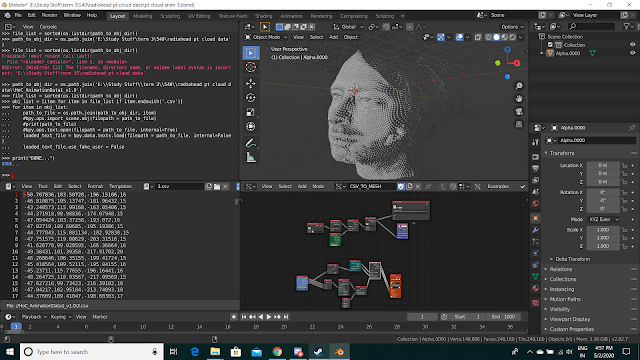

Today I spent most of the day working on the Radiohead video to get a better grasp of what will and won't work. I linked the process of getting the data into Blender in my previous post, and I managed to get it into Blender and animate it. The screenshot above shows the result. Note: if you try this yourself, make sure to use the Sverchok node add-on. It's basically visual programming.

There were 1,000 CSV files, and being the dumbass I am, I naturally imported all of them. I'm not entirely sure how, but I managed to run out of disk space on my laptop. As it turns out, Blender does not like handling this many CSV files, and every change added to the total file size (even deletions!), so from then on I made every change carefully. Anyway, everything went fine and dandy up to this point.

In the midst of all this, I realized that importing Alembic cache animations into Unreal Engine (UE) is really easy! So I tried exporting this data as an Alembic file, hoping that it'd work.